NASA Scaffold 3D

Scaffold 3D is an extended reality interface for teleoperating robots in dexterous manipulation tasks over a medium time delay. The interface was initially designed for the zSpace 3D stereo display and augmented reality device and for teleoperating Robonaut, NASA’s humanoid robot, but it can be adapted for other devices and robots. Design development, user evaluation, and prototyping were conducted using the Kinova Jaco robot arm.

The interface design addresses key manipulation and time delay challenges so that users can teleoperate robots safely and intuitively. It predicts future error probability; prevents errors by guiding the user away from high-risk operations; plans ahead, at least two time delays in advance; leverages the robot’s autonomy to react earlier; and sequences operations strategically to avoid compounding errors.

-

Time delay is present in every NASA mission, because a signal takes time to travel from Earth to destinations beyond it. The delay ranges from short (0-2 seconds) for the Moon, through medium (5-50 seconds) for a near-Earth asteroid, to long (1-20 minutes) for Mars and beyond. In robotic teleoperation, it takes one time delay for commands to reach the robot and one more time delay for the results of the robot’s actions to become visible, plus the time the robot takes to actuate those commands.
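
The round-trip arithmetic described here can be sketched in a few lines of Python. The function name and the 10-second actuation time are illustrative assumptions, not part of the Scaffold 3D software; the delay values are the upper bounds of the short and medium ranges quoted in the text.

```python
def feedback_latency(one_way_delay_s: float, actuation_time_s: float = 0.0) -> float:
    """Seconds between sending a command and seeing its result.

    One delay carries the command to the robot, the robot takes
    actuation_time_s to act, and one more delay carries the result back.
    """
    if one_way_delay_s < 0 or actuation_time_s < 0:
        raise ValueError("delays and actuation time must be non-negative")
    return 2 * one_way_delay_s + actuation_time_s


# Upper bounds of the short and medium delay ranges, with a
# hypothetical 10-second actuation time (an assumption for illustration).
for label, delay_s in [("Moon", 2.0), ("near-Earth asteroid", 50.0)]:
    print(f"{label}: {feedback_latency(delay_s, 10.0):.0f} s before feedback")
```

Even at the top of the medium range, the operator waits nearly two minutes for confirmation of a single command, which is why the interface emphasizes planning several steps ahead instead of reacting to each result.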

-

Because of the time delay, the user always operates on past information and across two different points in time: the user’s and the robot’s. This is inherently problematic, especially for a dexterous manipulation task, where it is highly uncertain whether the robot successfully carried out the operator’s intended actions. In a human manipulation task with no robot or time delay involved, such as picking up a full glass of water, errors can be corrected rapidly by adjusting the hand. A teleoperated robotic manipulation task presents several challenges. How can the interface enable operators to embody the robot, especially one whose degrees of freedom differ drastically from those of a human arm? Because errors tend to compound with greater uncertainty, how can the interface maximize operator accuracy to reduce input errors, and how can it accurately represent noisy robotic sensor data?

Teleoperation under short and long time delays is already fairly well established. Under a short delay of 0-2 seconds, as with the Canadarm robot at the International Space Station (ISS), real-time joystick control is used: the arm is manipulated joint by joint with a translational and a rotational controller. Under a long delay, for robots like the Mars Science Laboratory (MSL), preplanned autonomy is used: robot path plans are sequenced 1-2 weeks in advance for the robot to execute.

-

To make time delay manipulation possible, the design challenge was broken down into two main categories: 1) problems related to time delay, and 2) problems inherent in robotic manipulation. Manipulation problems must be resolved before time delay problems can be tackled. Minimizing user input time is key to getting ahead of time delay constraints.

The objective of the project is to design an interface that enables efficient operations during medium delay, is adaptable to a variety of robots, supports a variety of manipulation tasks, is intuitive and natural to use, promotes safety for operators and robots, and is scalable for multi-user operation.

-

The interface offers four main operations for time delay teleoperation of robots in manipulation tasks:

1. Functionalize environment: planning the steps for the robot to take while specifying properties of the environment, including the locations of objects based on the sensor data.

2. Motion planning states: determining where the robot’s end effector should go and deciding what pose and rotation it should be in.

3. Order events and sequences: placing end effector states in a timeline to indicate at what instant the robot should be there.

4. Repairing and editing: this uses the same interaction as operation 2, motion planning states. It comes into play when new sensor data arrives and indicates that the locations of objects have changed, so the locations of the end effector in the environment should change as well.
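
One way to picture how these operations fit together is a small data model in which each end effector state is stored relative to an object in the environment. This is a minimal sketch under that assumption, not the Scaffold 3D implementation: the class names, the `anchor`/`offset` fields, and the drawer coordinates are all hypothetical, and orientation is omitted for brevity.

```python
from dataclasses import dataclass, field

Vec3 = tuple[float, float, float]


@dataclass
class Keyframe:
    """One end effector state in the timeline (hypothetical structure)."""
    label: str
    anchor: str      # name of the environment object this state is tied to
    offset: Vec3     # end effector position relative to that object


@dataclass
class Plan:
    objects: dict[str, Vec3] = field(default_factory=dict)   # functionalized environment
    timeline: list[Keyframe] = field(default_factory=list)   # ordered sequence of states

    def target(self, kf: Keyframe) -> Vec3:
        """World-frame end effector position for a keyframe."""
        ox, oy, oz = self.objects[kf.anchor]
        dx, dy, dz = kf.offset
        return (ox + dx, oy + dy, oz + dz)

    def repair(self, name: str, new_position: Vec3) -> None:
        """Apply fresh sensor data; keyframes anchored to the object follow it."""
        self.objects[name] = new_position


# Functionalize the environment, plan states, and order them in a timeline.
plan = Plan(objects={"drawer_handle": (0.5, 0.0, 0.25)})
plan.timeline.append(Keyframe("approach", "drawer_handle", (-0.25, 0.0, 0.0)))
plan.timeline.append(Keyframe("grasp", "drawer_handle", (0.0, 0.0, 0.0)))
plan.timeline.append(Keyframe("pull", "drawer_handle", (-0.125, 0.0, 0.0)))

# Repair: new sensor data reports that the handle has moved sideways.
plan.repair("drawer_handle", (0.5, 0.125, 0.25))
targets = [plan.target(kf) for kf in plan.timeline]
```

Storing offsets instead of absolute positions means a single update to the object location moves every dependent state, so the operator does not have to re-edit each keyframe by hand.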

The exact trajectory the robot should take changes constantly with the robot, the task, and the point in the time delay the robot has reached. It is very hard to control and therefore not worth planning. By optimizing 3D control and enabling users to edit and plan rapidly at the same time, time delay manipulation becomes solvable, because user input time is dramatically reduced. Because users can skip the in-betweens of trajectory editing, they can spend their time planning ahead. This style of control also cuts down the operation time needed to position something. With real-time joystick control, the user has to move down and then back up through joint-level control to accomplish a task. Raising the level of control to planning poses and start and end positions would make the same task faster even in real time. When an error occurs at the uncertainty point, operators can make adjustments that propagate through the entire stack. Real-time joint-level control offers no undo and no way to rewind and retrace steps accurately. Planning far ahead offers no way to take back an action. In medium time delay, operators get the benefits of both while editing and changing things rapidly and flexibly.
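
The idea of editing a plan while staying ahead of the delay can be illustrated with a short Python sketch. It assumes, purely for illustration, that each planned state carries a scheduled execution time on the robot’s clock; `TimedKeyframe`, `still_editable`, and the sample times are hypothetical names and values, and actuation time is ignored.

```python
from dataclasses import dataclass


@dataclass
class TimedKeyframe:
    label: str
    execute_at_s: float   # scheduled execution time on the robot's clock


def still_editable(timeline, observed_robot_time_s, one_way_delay_s):
    """Return keyframes that an edit sent right now can still reach in time.

    The operator's view is one delay old and a command needs one more
    delay to arrive, so the earliest reachable instant on the robot's
    clock is observed time + 2 * delay.
    """
    cutoff = observed_robot_time_s + 2 * one_way_delay_s
    return [kf for kf in timeline if kf.execute_at_s > cutoff]


timeline = [
    TimedKeyframe("approach", 40.0),
    TimedKeyframe("grasp", 90.0),
    TimedKeyframe("pull", 140.0),
]
editable = still_editable(timeline, observed_robot_time_s=20.0, one_way_delay_s=30.0)
print([kf.label for kf in editable])
```

With a 30-second one-way delay and a view of the robot at 20 seconds, anything scheduled before the 80-second mark is already out of reach, which is one reading of why planning at least two time delays ahead matters.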

-

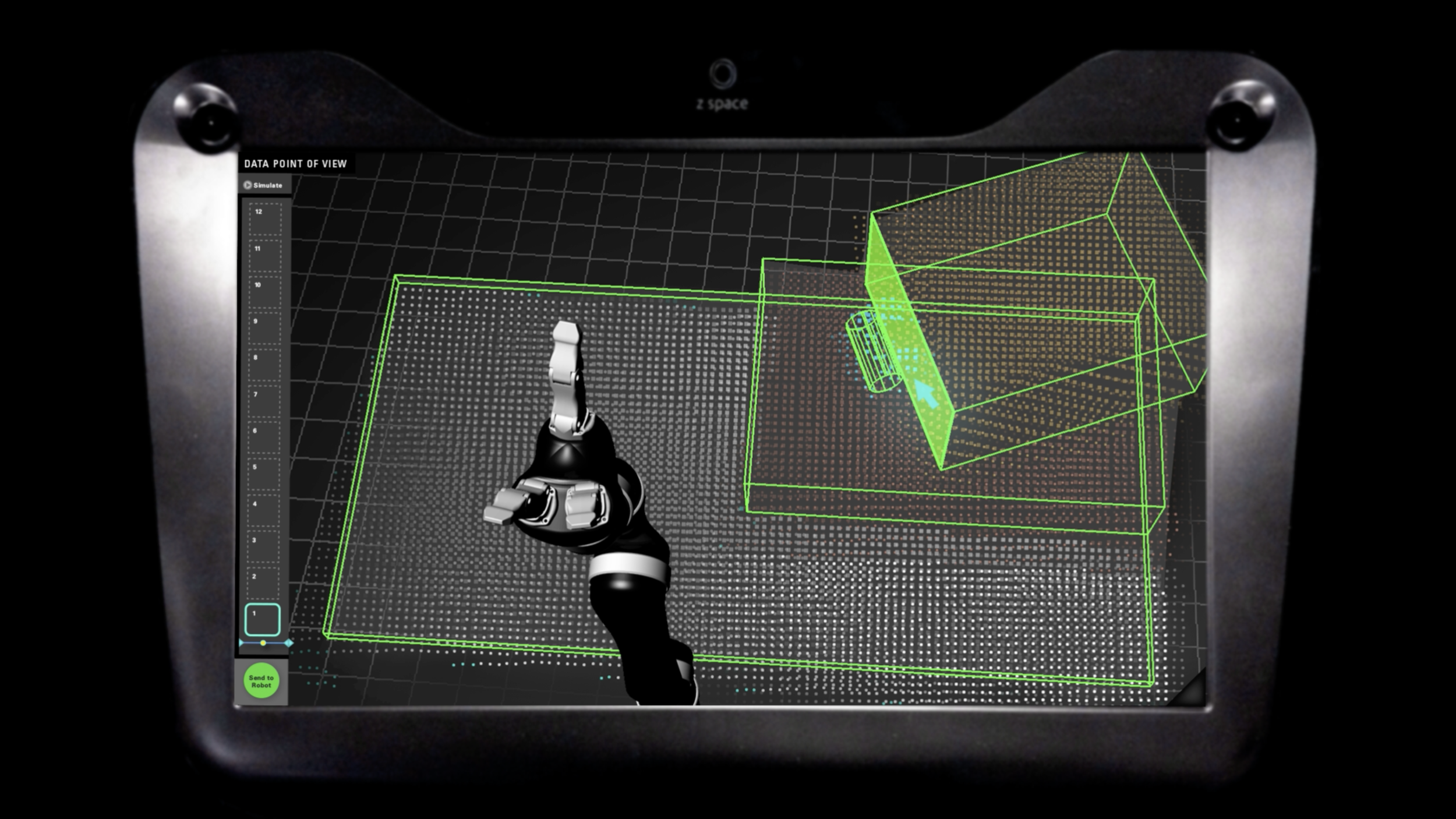

Shown on a zSpace stereo display, the interface consists of an RGB video stream with an overlay of a 3D point cloud, taken from the position of two co-registered Prime sensors placed behind the Jaco robot arm to simulate Robonaut2’s rough point of view. A timeline on the left shows the operator’s sequenced positions for commanding the robot. The numbered frames show the order in which events take place, from bottom to top. The blue line with a centered yellow dot shows the robot’s current position in the timeline. A green “send” button at the bottom of the timeline sends finalized commands. Above the timeline, a button with a “play” icon lets the user simulate the robot’s trajectory before sending it to the robot. In this task scenario, the operator must generate a sequence in the timeline for the robot to open the drawer within a 30-50 second time delay, so that the object inside is revealed.

Process

KINESTHETIC PERCEPTION STUDIES

A literature review and series of exploratory studies were conducted to understand human kinesthetic, haptic, and gestural perception to support interface design. Example studies included blindfolded tasks, kinematic paper prototypes, degree of freedom mapping, and 2D teleoperation studies.

TASK ANALYSIS

Real-world and virtual manipulation tasks were analyzed by simulating task scenarios that a remote operator may encounter in order to understand task sequences, dependencies, and error hot spots.

INPUT-OUTPUT DEVICE ANALYSIS

User input, system output, and task contexts were studied in depth. A series of off-the-shelf input and output devices were qualitatively evaluated with users carrying out manipulation tasks under simulated conditions.

LOW FIDELITY PROTOTYPES

A variety of low-fidelity paper prototypes were created. Prior to implementation, user studies were conducted using techniques that included Wizard of Oz, video prototypes, wireframes, and role-playing exercises.

INTERACTION STORYBOARD

Storyboard sketches and drawings were produced in the design process. The documents were used to develop ideas further, communicate with the software team, and iterate on the user flow.

USER FLOW

User flow for operating the robotic interface was diagrammed and visualized based on iterative usability tests.

HIGH FIDELITY PROTOTYPES

Various interaction techniques were tested with users. Prime sensors were mounted to model Robonaut’s vision system. The sensor data provided a 3D depth map in the form of a point cloud for remote viewing by operators. A Kinova Jaco robot arm was used to test different interface designs.

Client: NASA Jet Propulsion Lab